We have all worked with power supplies; if you haven't, you are on the wrong website.

Every power supply has a rating.

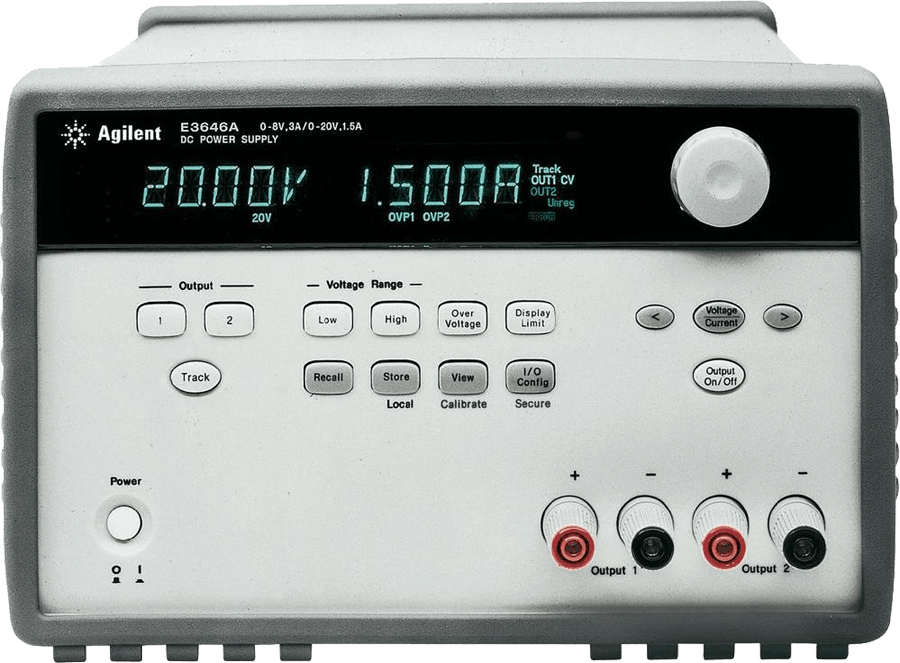

On this one, one mode gives us a rating of 0-8 V with 3 A of current.

This very statement seemed contradictory to me. We were all taught that we apply the voltage and the circuit, through its equivalent resistance Req, decides what current will flow. How can an 8 V supply decide how much current my external circuit can draw? Isn’t Ohm’s law V = IR, where the current for a constant voltage is decided by the resistance of the circuit?
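To make that textbook picture concrete, here is a minimal sketch of what Ohm's law alone predicts for an ideal 8 V source. The function name `ohms_law_current` and the resistor values are my own illustrative choices, not anything read off the supply.

```python
def ohms_law_current(voltage_v, resistance_ohm):
    """Current an ideal voltage source pushes through a resistor: I = V / R."""
    return voltage_v / resistance_ohm


# Illustrative load resistances (assumed values), all driven at 8 V.
for r in (16.0, 8.0, 4.0, 2.0):
    i = ohms_law_current(8.0, r)
    print(f"R = {r:4.1f} ohm -> I = {i:.2f} A")
```

With an ideal source nothing stops the current from growing as the resistance shrinks, which is exactly why a current figure on a voltage supply looks odd at first.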

It turns out that the current flowing in our external circuit also has to pass through our voltage supply, and as it passes through, the supply monitors and regulates it.

For this power supply, for example, suppose the voltage applied to the external circuit is 8 V and the external circuit wants to draw more than 3 A. The supply notices this immediately, and this step is crucial: it will not allow more than 3 A to flow through the external circuit.

As a result, the voltage source turns into a current source that outputs a constant 3 A, and its output voltage falls below 8 V to whatever value keeps the current at that limit. This is why a voltage source has a current rating on it.
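The switch from voltage source to current source can be sketched as a tiny model. This is an idealized picture that assumes a purely resistive load and a perfectly sharp changeover; the function `supply_output` and the "CV"/"CC" labels are hypothetical names of mine, and the numbers use the 0-8 V, 3 A rating quoted earlier.

```python
def supply_output(v_set, i_limit, r_load):
    """Return (voltage, current, mode) for an idealized current-limited supply.

    v_set   -- voltage dialed in on the supply, in volts
    i_limit -- current rating or limit, in amps
    r_load  -- resistance of the external circuit, in ohms (assumed resistive)
    """
    i_demand = v_set / r_load  # what Ohm's law alone would ask for
    if i_demand <= i_limit:
        # Constant-voltage mode: the load decides the current.
        return v_set, i_demand, "CV"
    # Constant-current mode: the supply pins the current at the limit,
    # so the output voltage sags to I_limit * R.
    return i_limit * r_load, i_limit, "CC"


print(supply_output(8.0, 3.0, 4.0))  # load asks for 2 A, under the limit
print(supply_output(8.0, 3.0, 2.0))  # load asks for 4 A, over the limit
```

In the second call the load would like 4 A at 8 V, but the model holds the current at 3 A and lets the voltage settle at 6 V, which is the behaviour described above.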

If you have questions or find anything ambiguous, please don’t hesitate to email me.